vSAN is VMware’s approach to Software Defined Storage. We are not going to explain the ins and outs of vSAN, but we do want to provide a basic understanding for those who have never worked with it. vSAN pools the local storage of each host and presents it as a single shared datastore.

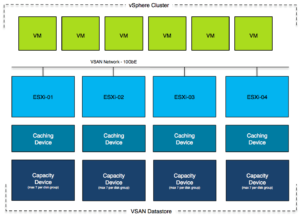

Figure 1 – Virtual SAN Cluster

vSAN requires a minimum of 3 hosts, and each of those hosts needs 1 SSD for caching and 1 capacity device (SSD or HDD). Only the capacity devices contribute to the available capacity of the datastore. If you have 1TB worth of capacity devices per host, then with three hosts the total size of your datastore will be 3TB.
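
The capacity arithmetic above can be written down as a minimal sketch. The function name and its parameters are our own, purely for illustration. It computes raw datastore size only and does not account for the space that replicas consume.

```python
def raw_datastore_capacity_tb(hosts: int, capacity_tb_per_host: float) -> float:
    """Raw vSAN datastore size in TB.

    Only capacity devices count towards the datastore size;
    caching devices contribute nothing.
    """
    if hosts < 3:
        # The text above states a minimum of 3 hosts for a regular cluster.
        raise ValueError("a regular vSAN cluster needs at least 3 hosts")
    return hosts * capacity_tb_per_host


# Three hosts with 1TB of capacity devices each.
print(raw_datastore_capacity_tb(3, 1.0))
```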

That said, with vSAN 6.1 VMware introduced a “2-node” option. This 2-node option actually consists of 2 regular vSAN nodes plus a third “witness” node.

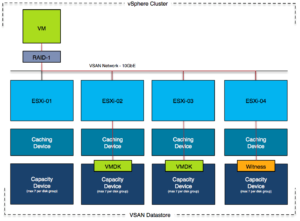

The big differentiator between most storage systems and vSAN is that the availability of a virtual machine is defined on a per virtual disk or per virtual machine basis. This is called “Failures To Tolerate” (FTT) and can be configured to any value between 0 (zero) and 3. When configured to 0, the virtual machine will have only 1 copy of its virtual disks, which means that if the host storing those virtual disks fails, the virtual machine is lost. As such, all virtual machines are deployed by default with FTT set to 1. A virtual disk is what vSAN refers to as an object. An object, when FTT is configured as 1 or higher, has multiple components. In the diagram below we demonstrate the FTT=1 scenario, in which the virtual disk has 2 “data components” and a “witness component”. The witness is used as a “quorum” mechanism.

Figure 2 – Virtual SAN Object model
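
To make the relation between FTT and the object layout concrete, here is a small sketch. It assumes mirroring, where tolerating n failures takes n + 1 data copies and 2n + 1 hosts to place the object. That rule is not spelled out in the text above, but it matches the FTT=1 example (2 data components plus a witness across 3 hosts). The function and key names are hypothetical.

```python
def mirroring_layout(ftt: int) -> dict:
    """Data copies and hosts needed for one object at a given FTT.

    Assumption: plain mirroring, so n failures need n + 1 data
    copies and 2n + 1 hosts to keep a majority available.
    """
    if not 0 <= ftt <= 3:
        raise ValueError("FTT must be between 0 and 3")
    return {"data_copies": ftt + 1, "hosts_for_object": 2 * ftt + 1}


for ftt in range(4):
    print(ftt, mirroring_layout(ftt))
```

With FTT=0 there is a single copy and no redundancy, which is why losing the host that stores it means losing the virtual machine.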

As the diagram above depicts, a virtual machine can be running on the first host while its storage components are on the remaining hosts in the cluster. As you can imagine, from an HA point of view this changes things: access to the network is critical not only for HA to function correctly but also for vSAN. When it comes to networking, note that when vSAN is configured in a cluster, HA will use the same network for its communications (heartbeating etc.). On top of that, it is good to know that VMware highly recommends using 10GbE for vSAN.

Basic design principle: 10GbE is highly recommended for vSAN. As vSphere HA also leverages the vSAN network and the availability of VMs depends on network connectivity, ensure that at a minimum two 10GbE ports and two physical switches are used for resiliency.

The reason that HA uses the same network as vSAN is simple: it is to avoid network partition scenarios where HA communication is separated from vSAN and the state of the cluster is unclear. Note that you will need to ensure that there is a pingable isolation address on the vSAN network, and that this isolation address is configured as such through the advanced setting “das.isolationAddress0”. We also recommend disabling the default isolation address through the advanced setting “das.useDefaultIsolationAddress” (set to false).
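
The two advanced settings mentioned above can be captured as a small sketch that builds the key/value pairs for a given isolation address. The function name and the example IP address are hypothetical. Only the two setting names come from the text, and how the values are applied to the cluster (UI, script or API) is left out.

```python
import ipaddress


def isolation_settings(vsan_isolation_ip: str) -> dict:
    """Build the HA advanced settings for a vSAN-backed cluster."""
    # Raises ValueError when the string is not a valid IP address.
    ipaddress.ip_address(vsan_isolation_ip)
    return {
        # A pingable address that lives on the vSAN network.
        "das.isolationAddress0": vsan_isolation_ip,
        # Do not fall back to the default isolation address.
        "das.useDefaultIsolationAddress": "false",
    }


# 192.168.10.1 is an assumed address on the vSAN network.
for key, value in isolation_settings("192.168.10.1").items():
    print(f"{key} = {value}")
```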

When an isolation does occur, the isolation response is triggered as explained in earlier chapters. For vSAN the recommendation is simple: configure the isolation response to “Power Off, then fail over”. This is the safest option. vSAN can be compared to the “converged network with IP based storage” example we provided. It is very easy to reach a situation where a host is isolated and all of its virtual machines remain running, while those same virtual machines are restarted on another host because the connection to the vSAN datastore is lost.

Basic design principle: Configure your Isolation Address and your Isolation Policy accordingly. We recommend selecting “power off” as the Isolation Policy and a reliable pingable device as the isolation address.

What about things like heartbeat datastores and the folder structure that exists on a VMFS datastore? Has any of that changed with vSAN? Yes, it has. First of all, in a vSAN-only environment the concept of heartbeat datastores is not used at all. The reason for this is straightforward: as HA and vSAN share the same network, it is safe to assume that when the HA heartbeat is lost because of a network failure, so is access to the vSAN datastore. Heartbeat datastores are only configured in an environment that also has traditional storage, in which case those traditional datastores are used as heartbeat datastores. Note that we do not feel there is a reason to introduce traditional storage just to provide HA with this functionality; HA and vSAN work perfectly fine without heartbeat datastores.

Normally HA metadata is stored in the root of the datastore. For vSAN this is different, as the metadata is stored in the VM’s namespace object. The protectedlist is held in memory and updated automatically when VMs are powered on or off.

Now you may wonder, what happens when there is an isolation? How does HA know where to start the VM that is impacted? Let’s take a look at a partition scenario.

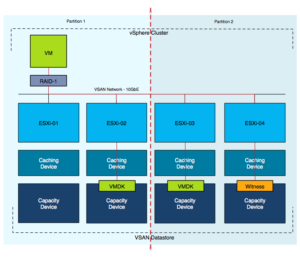

Figure 3 – vSAN Partition scenario

In this scenario a network problem has caused a cluster partition. Where a VM is restarted is determined by which partition owns the virtual machine files. Within a vSAN cluster this is fairly straightforward. There are two partitions: one is running the VM and holds one copy of its VMDK, and the other holds a VMDK replica and a witness. Guess what happens? Right, vSAN uses the witness to see which partition has quorum, and based on that result one of the two partitions will win. In this case, Partition 2 has more than 50% of the components of this object and as such is the winner. This means that the VM will be restarted on either “esxi-03” or “esxi-04” by vSphere HA. Note that the VM in Partition 1 will be powered off only if you have configured the isolation response to do so. We would like to stress that this is highly recommended: set the isolation response to “power off”.
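
The quorum decision in this scenario can be sketched in a few lines. This is an illustration under a simplifying assumption, namely that every component (data or witness) counts equally, and it is not how vSAN is implemented internally. The function name and the partition labels are our own.

```python
from typing import Dict, Optional


def partition_with_quorum(components: Dict[str, int]) -> Optional[str]:
    """Return the partition holding more than 50% of an object's
    components, or None when no partition has a majority.

    Assumption: each component counts for exactly one vote.
    """
    total = sum(components.values())
    for partition, count in components.items():
        if count * 2 > total:  # strictly more than half
            return partition
    return None


# Partition 1 holds one VMDK copy; Partition 2 holds a replica and the witness.
print(partition_with_quorum({"Partition 1": 1, "Partition 2": 2}))
```

Without the witness the object would be split 1 to 1 and neither side could claim ownership, which is exactly why the witness component exists.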

One final thing that is different for vSAN is how a partition is handled in a stretched cluster configuration. In a regular stretched cluster configuration using VMFS/NFS based storage, VMs impacted by APD or PDL will be killed by HA. With vSAN this is slightly different. Unfortunately, HA VMCP in 6.0 is not supported with vSAN. Instead, vSAN has its own mechanism: it recognizes when a VM running on a group of hosts has no access to any of its components, and when this is the case it will simply kill the impacted VM. You can disable this behavior, although we do not recommend doing so, by setting the advanced host setting VSAN.AutoTerminateGhostVm to 0.